Cloud is no longer just the backbone of digital infrastructure—it’s the operating system for enterprise reinvention. However, most cloud strategies remain stuck in legacy thinking: procurement-led sourcing, cost-centric optimization, and infrastructure-focused design. As AI moves from experimentation to execution, these outdated models are breaking down—slowing time-to-value, increasing integration debt, and creating governance blind spots.

To compete in the AI-native era, enterprise leaders must reframe cloud as a strategic platform—not a tech utility. It is the foundation for unlocking data, scaling automation, and operationalizing AI across workflows. The cloud of today isn’t just about uptime—it’s about differentiated business outcomes.

Exhibit 1: The cloud evolution continuum

Source: HFS Research, 2025

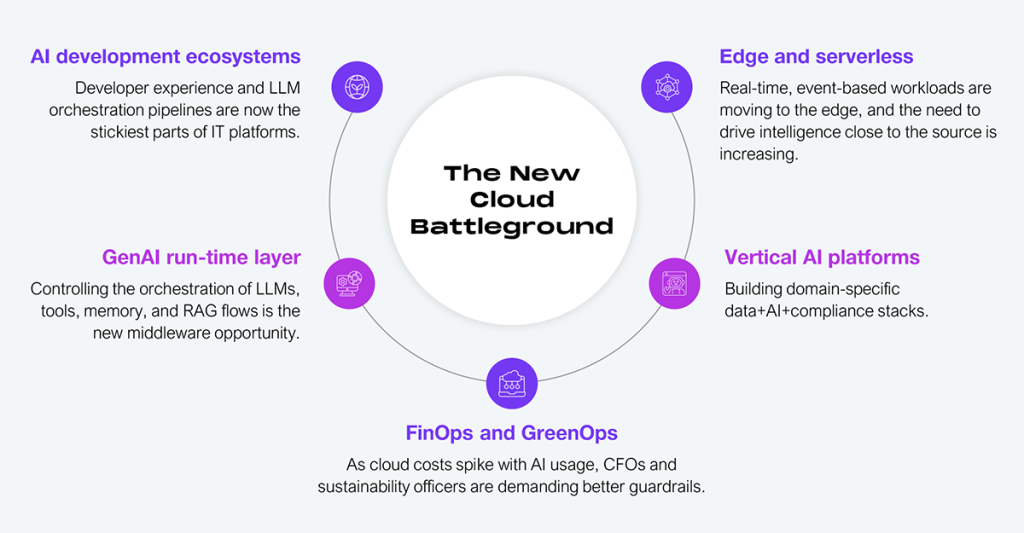

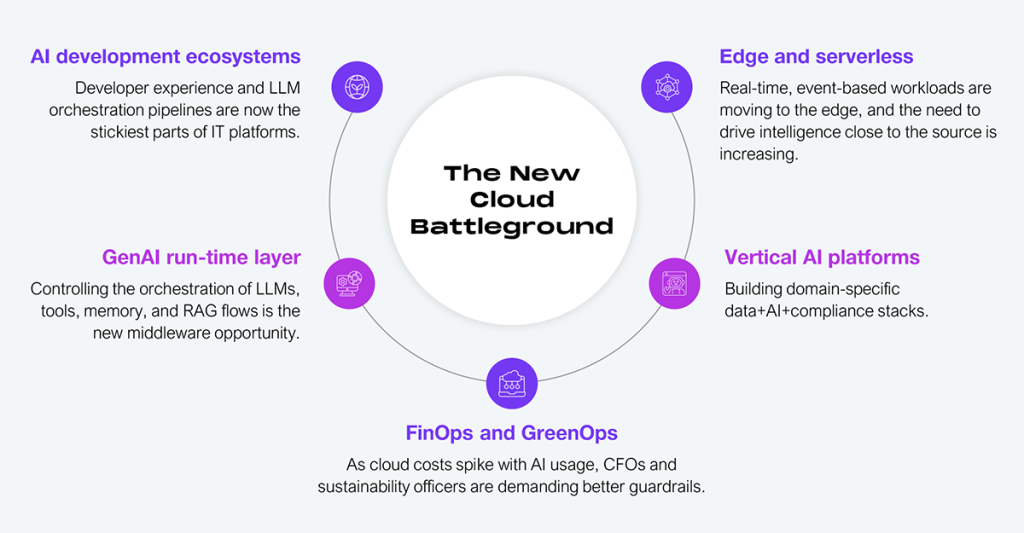

The hyperscaler dogfight has moved to higher ground

The battleground among AWS, Azure, and Google Cloud has shifted. It’s no longer about availability zones or compute pricing. Hyperscalers are now competing to own the next layer of the enterprise stack: the application runtime for GenAI and industry-specific intelligence. For enterprises, this brings fresh opportunities for leverage, but also new forms of lock-in and harder decisions.

Exhibit 2: The hyperscaler battleground has shifted to AI-native value orchestration

Source: HFS Research, 2025

Meanwhile, platforms such as Cloudflare, Vercel, and Hugging Face are redefining the cloud value chain one layer above the virtual machine. They aren’t trying to compete on raw compute; instead, they are shaping how AI workloads are executed, served, and governed. These platforms appeal to developers with simplicity, to enterprises with speed, and to AI builders with toolchain flexibility. Rather than replacing the hyperscalers, they’re rerouting value around them.

Enterprises must reframe their cloud strategy now

Legacy cloud sourcing models were built for lift-and-shift migrations and cost control, not for orchestrating AI pipelines, enforcing sovereign data residency, or providing multi-cloud observability. Enterprises must abandon narrow procurement-led frameworks and embrace a future where cloud is embedded into every aspect of business design, delivery, and decision-making.

Rethinking the cloud strategy starts with fundamental mindset shifts:

- Cloud is not a destination but a capability: Enterprises must architect for portability, not placement—designing infrastructure and data flows that can operate across hyperscalers, private clusters, and edge locations.

- Cloud is not a cost center but a business enabler: Budgets must be linked to GenAI ROI and business impact, not just usage and uptime. FinOps must evolve into a value-driven governance discipline.

- Cloud is not an IT responsibility but a board-level concern: Decisions about AI models, cloud latency, security trade-offs, and regulatory risks affect enterprise competitiveness and must be owned at the board level, not delegated to IT alone.

- Cloud is not a toolset but the enterprise nervous system: The cloud stack now determines how AI agents access data, how processes are automated in real time, and how teams co-pilot with machines.

Enterprises must revisit sourcing, architecture, and governance with a fundamentally different lens, tightly linking cloud strategy with business strategy.

For example, a leading global pharma company re-architected its clinical data platform on a hybrid cloud foundation to support GenAI-driven document summarization for trial reporting, reducing the processing time by over 40% and increasing compliance traceability.

Push for platform-led partnerships: What enterprise technology leaders must demand from cloud vendors

- Design for differentiated value, not just uptime: Hyperscalers must go beyond utility infrastructure to deliver domain-specific, compliance-ready, and vertically integrated platforms. Cloud strategies must start with business problems and work backward to modular platform solutions.

- Operationalize the GenAI stack: Vendors must natively support orchestration components such as vector databases, RAG frameworks, prompt templates, context management, model evaluation, and memory. Look for providers with integrated model ops and lifecycle monitoring, not just model hosting APIs.

- Expose transparent sustainability and usage telemetry: Embedded dashboards should show emissions impact, LLM token usage, data locality, and usage spikes without the need for third-party plug-ins.

- Shift toward outcome-based commercial models: Enterprises should advocate for pricing aligned to business value and performance metrics (e.g., query throughput, model precision), not just compute hours or data storage consumed. Token-based pricing and shared-savings contracts are early examples of this shift.

Engineer for intelligence: What enterprise leaders should expect from system integrators (SIs)

- Build AI-first process transformation, not just UI overlays: SIs must move from building GenAI front ends to embedding AI in core business logic, including decisions, forecasting, compliance workflows, and knowledge retrieval chains.

- Deliver governance-first orchestration: Enterprises need visibility into how GenAI systems are performing, when they fail, what’s being cached or retrieved, and how decisions are being logged. SIs must offer structured observability, explainability tooling, and AI-specific security patterns.

- Create modular, hyperscaler-native IP accelerators: Reusable templates, prompt chains, retrieval connectors, and agent orchestration modules must run directly on Amazon Bedrock, Azure AI Studio, or Google Cloud Vertex AI, not as abstracted black boxes.

- Design cross-cloud architectural governance: SIs must define policies, compliance boundaries, and failover logic across hybrid, sovereign, and multi-cloud environments, enabling trust in distributed AI workloads.

The cloud–SI collaboration must evolve to co-own outcomes

It’s time for hyperscalers and SIs to stop working in parallel and start co-owning the outcomes. Together, they must:

- Build plug-and-play runtime environments for GenAI pipelines that integrate logging, API orchestration, RAG, model switching, and policy enforcement.

- Develop pre-validated solution bundles by industry (e.g., compliant underwriting copilots, AI-powered maintenance advisors) with pricing transparency and risk posture clarity.

- Offer dynamic consumption models with shared incentives around token optimization, latency, and prediction accuracy.

- Stand up joint cloud + AI innovation pods staffed with cloud engineers, AI ethicists, and domain specialists to co-design production-grade AI experiences with clients.

The Bottom Line: Re-evaluate your cloud strategy before your AI ambitions outgrow it.

Cloud is no longer just a delivery layer; it’s the connective fabric between AI ambitions and enterprise execution. As enterprises push AI deeper into workflows, decisions, and customer experiences, cloud becomes the deciding factor in what scales, what stays secure, and what creates competitive advantage. The cloud of yesterday delivered speed and savings. The cloud of today must deliver trust, outcomes, and control.

Enterprises that don’t reassess their cloud models will fall behind because they will lack the foundation to operationalize AI.

Did someone say cloud is passé? If anything, it’s more relevant than ever.